Research for Real-World Impact

Our areas of research are defined based on our mission around sovereignty, human empowerment and responsibility. We extend what’s possible on a fundamental level, adding to the state-of-the-art to enable the most complex and critical AI use-cases.

Capable AI

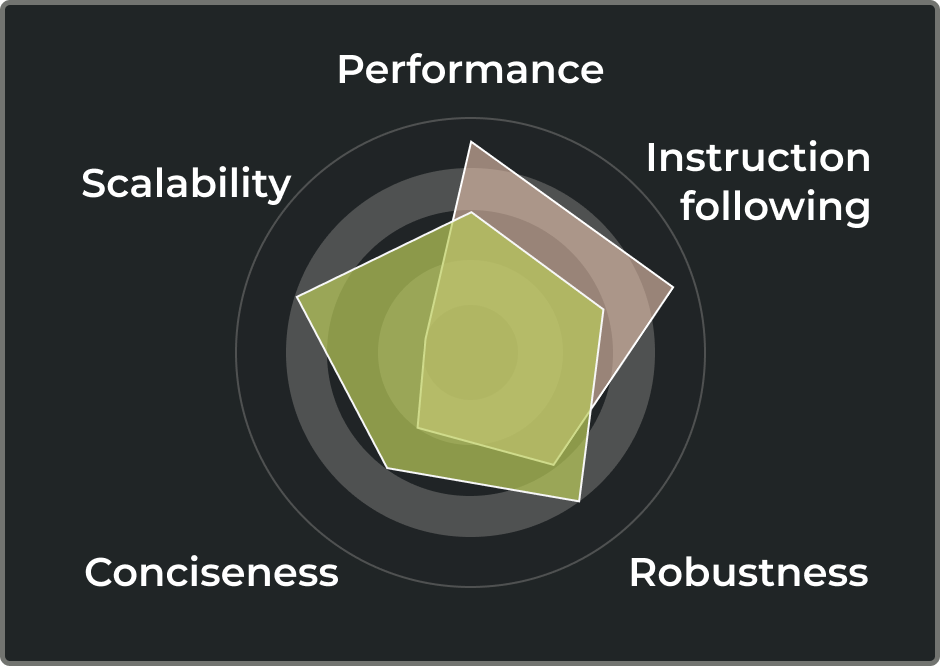

We develop AI built for production environments that balance the needs of raw performance, scalability, and robustness – thus meeting the standards of industry leaders beyond proof-of-concept implementations.

We innovate foundational model designs and training approaches with the goal of continuously raising the bar on innovation or sovereignty. We care about compliant, efficient models focused on a wide range of languages and are working to advance foundation model architectures combined with integration patterns beyond chatbots.

Accessible

We make AI accessible for every organization even in data-scarce contexts such as low-resource languages, multimodality and specialised enterprise knowledge.

We develop methods to improve transparency, fairness and efficiency across languages and domains, allowing for better performance when less data is available or compute budget is restricted.

Transparent

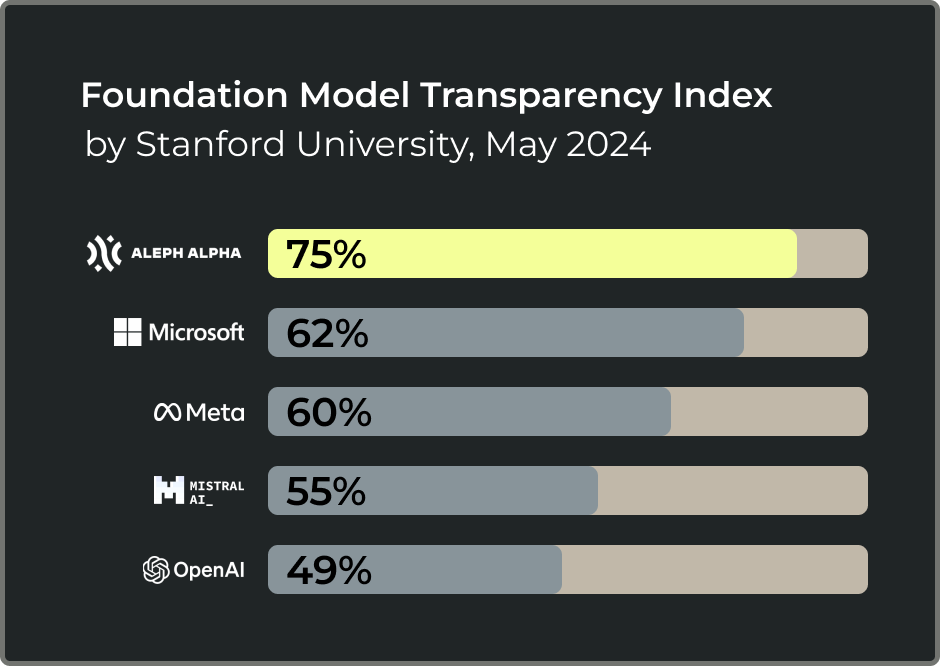

Transparency is at the core of our mission. We ensure sovereignty for our customers by openly sharing how our technology works, from in-depth development publications – such as papers and blog posts – to the source-available approach of our stack.

Without transparency, organizations struggle to assess AI suitability and compliance, particularly in regulated environments.

Trustworthy

The black-box nature of GenAI makes it difficult for humans to take responsibility, especially in regulated sectors where a thorough understanding of model behavior is critical. We develop methods for inspecting, understanding, and validating responses, putting the human at the center.

Controllable

We design AI systems with safety and control at their core, empowering users to tailor outputs to their unique needs and preferences in real time.

Rejecting a one size fits all approach, we prioritize diversity of options in AI outputs, enabling seamless customization for each use-case and user. Our customers are in control of their distinct values, beliefs, cultural nuances, and stylistic choices – all without requiring extensive model retraining.

Interactive

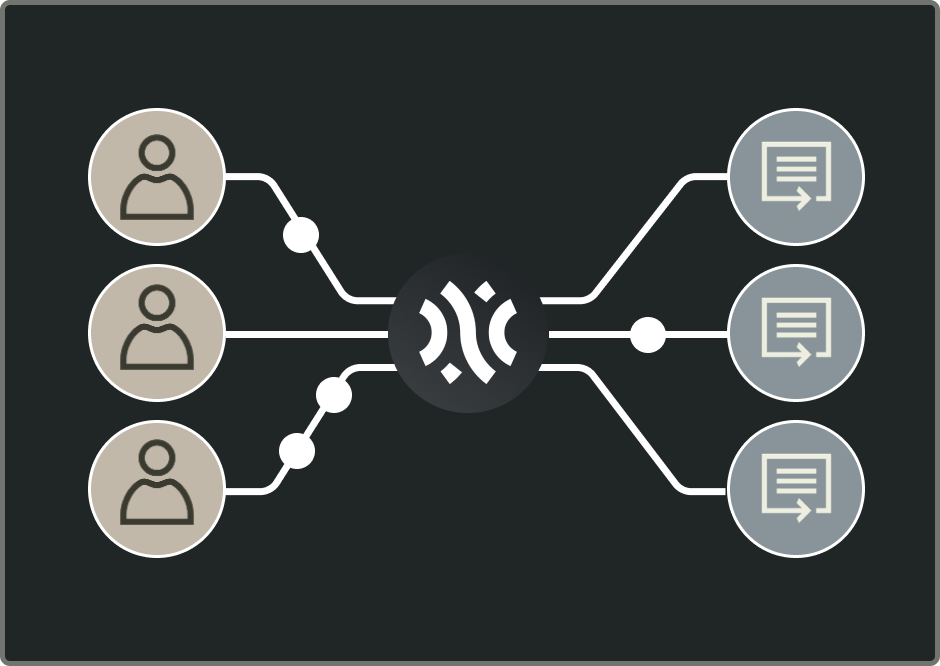

We design AI systems not only for benchmarks but with human compatibility at their core, ensuring they align with users’ needs and expectations for seamless integration.

Through human-centred research, our technology adapts to real-world workflows and improves clarity and usability with innovative human-machine interaction patterns tailored to tasks beyond chatbots and questions that don’t have easy answers.

Innovation Highlights

Research Partnerships and Collaborations

We are partnering with established research organizations to develop cutting-edge AI innovations

Join Our Open-Source Community

We welcome every contribution, value collaboration, and embrace constructive feedback. Join us to enhance our models, share ideas, and help shape the future of AI together.